A Russian-fed misinformation campaign across Facebook and Twitter, plus powerful data-targeting techniques pioneered by the Obama campaign, helped propel Donald Trump into the White House.

Big tech’s political fortunes have dimmed accordingly. It is at risk of joining big tobacco, big pharma, and big oil as a bona fide corporate pariah.

A growing number of thinktanks, regulators and journalists are grappling with the question of how to best regulate big tech. But we won’t fix it with better public policy alone. We also need better language. We need new metaphors, new discourse, a new set of symbols to illustrate how these companies are rewiring our world, and how we as a democracy can respond.

Tech companies themselves are very aware of the importance of language. They spend hundreds of millions of dollars on lobbying and PR to preserve control of the narrative.

In its public messaging, Facebook describes itself as a “global community”, dedicated to deepening relationships and bringing loved ones closer together. Twitter is “the free speech wing of the free speech party”, where voices from across the world can engage with each other in a free flow of ideas. Google “organizes the world’s information”, serving it up with the benevolence of a librarian.

In light of the last election and combined market valuations that stretch well into the trillions of dollars, this techno-utopian rhetoric strikes a disingenuous chord. What would a better language look like?

Most of the regulatory conversations about platforms try to draw analogies to other forms of media. They compare political advertising on Facebook to political advertising on television, or they argue that platforms should be treated like publishers and bear liability for what they publish. However, there’s something qualitatively different about the scale and the reach of these new platforms that opens a genuinely new set of concerns.

At last count, Facebook had more than 200 million users in the United States, meaning that its “community” overlaps to a profound degree with the national community at large. It is on platforms like Facebook that much of our public and private life now takes place. A few big companies now operate enormous, privately owned public spheres.

The ground on which politics happens has changed – yet our political language has not kept up. We lack a common public vocabulary to express our anxieties and to clearly name what has changed about how we communicate and receive our information about the world.

The biggest insight in George Orwell’s 1984 was not about the role of surveillance in totalitarian regimes, but rather the primacy of language. In the book’s dystopian world, the Party continually revises the dictionary, removing words to extinguish the expressive potential of language. Its goal is to make it impossible for vague senses of dread and dissatisfaction to find linguistic form and evolve into politically actionable concepts. If you can’t name and describe an injustice, then you will have an extremely difficult time fighting it.

In the late 19th century, rapid industrialization changed the social fabric of the United States and concentrated immense economic power in the hands of a few individuals. The first laws to regulate industrial monopolies went on the books in the 1860s to protect farmers from railroad price-gouging, but it wasn’t until 1911 that the federal government used the Sherman Antitrust Act to break up one of the country’s biggest monopolies: Standard Oil.

In the intervening 50 years, a tremendous amount of political work had to happen. Among other things, this involved broad-based consciousness building: it was essential to get the public to understand how these historically unprecedented industrial monopolies were bad for ordinary people and how to reassert control over them.

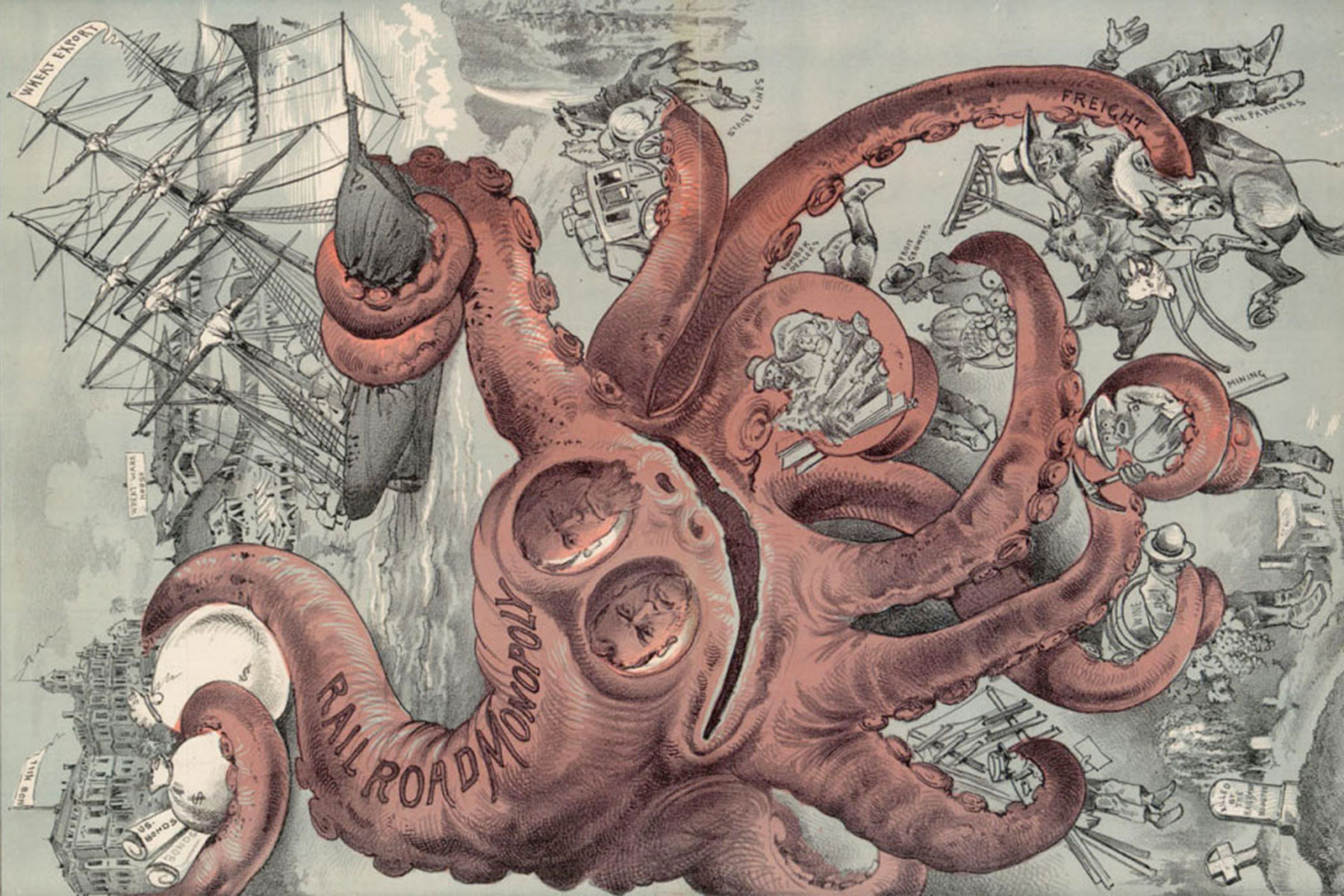

Political cartoons offered an indispensable tool by providing a rich set of symbols for thinking about the problems with unaccountable and overly centralized corporate power. The era’s big industrialists – John Rockefeller, Cornelius Vanderbilt, Andrew Carnegie – were typically depicted as plump men in frock coats, resting comfortably on the backs of the working class while Washington politicians snuggled in their pockets. And the most popular symbol of monopoly was the octopus, its tentacles pulling small-town industry, savings banks, railroads, and Congress itself into its clutches.

The octopus was a brilliant metaphor. It provided a simple way to understand the deep interconnection between complex political and economic forces – while viscerally expressing why everyone was feeling so squeezed. Its critique landed immediately.

What would today’s octopus look like?

In recent years, the “alt-right” has been particularly effective at minting new symbols that capture big ideas that are difficult to articulate. Chief among them, perhaps, is the “red pill”, which dragged the perception-shifting plot device from The Matrix through the fetid and paranoid misogyny of “men’s rights activist” forums and turned it into a politically actionable concept.

The red pill is a toxic idea, but it is also a powerful one. It provides a new way to talk about how ideology shapes the world and extends an invitation to consider how the world could be radically different.

The left, unfortunately, has been lacking in concepts of similar reach. To develop them, we will need a way of talking about big tech that is viscerally affecting, that intuitively communicates what these technologies do, and that wrenches open a way to imagine a better future.

A hint of where we may find this new political language recently appeared in the form of the White Collar Crime Risk Zones app. It applies the same techniques used in predictive-policing algorithms – profoundly flawed technologies which tend to criminalize being a poor person of color – to white-collar crime.

Seeing the business districts of Manhattan and San Francisco blaze red, with mugshots of suspects generated from LinkedIn profile photos of largely white professionals, makes its point in short order. It seems absurd – until you realize that it is exactly what happens in poor communities of color, with crushing consequences. What if police started frisking white guys in suits downtown?

The White Collar Crime Risk Zones app is effective because it functions as a kind of rhetorical software: not designed to collect data or sell advertising, but to make an argument. And while software will never be the solution to our political and social problems, it may, to hijack a slogan of big tech, at least provide a way to “think different.”

Marc DaCosta and K. Sabeel Rahman

George B. Luks and George Frederick Keller

- Originally published by The Guardian as How to fix big tech? We need the right language to describe it first

- A longer version of this article appears in Logic as The New Octopus